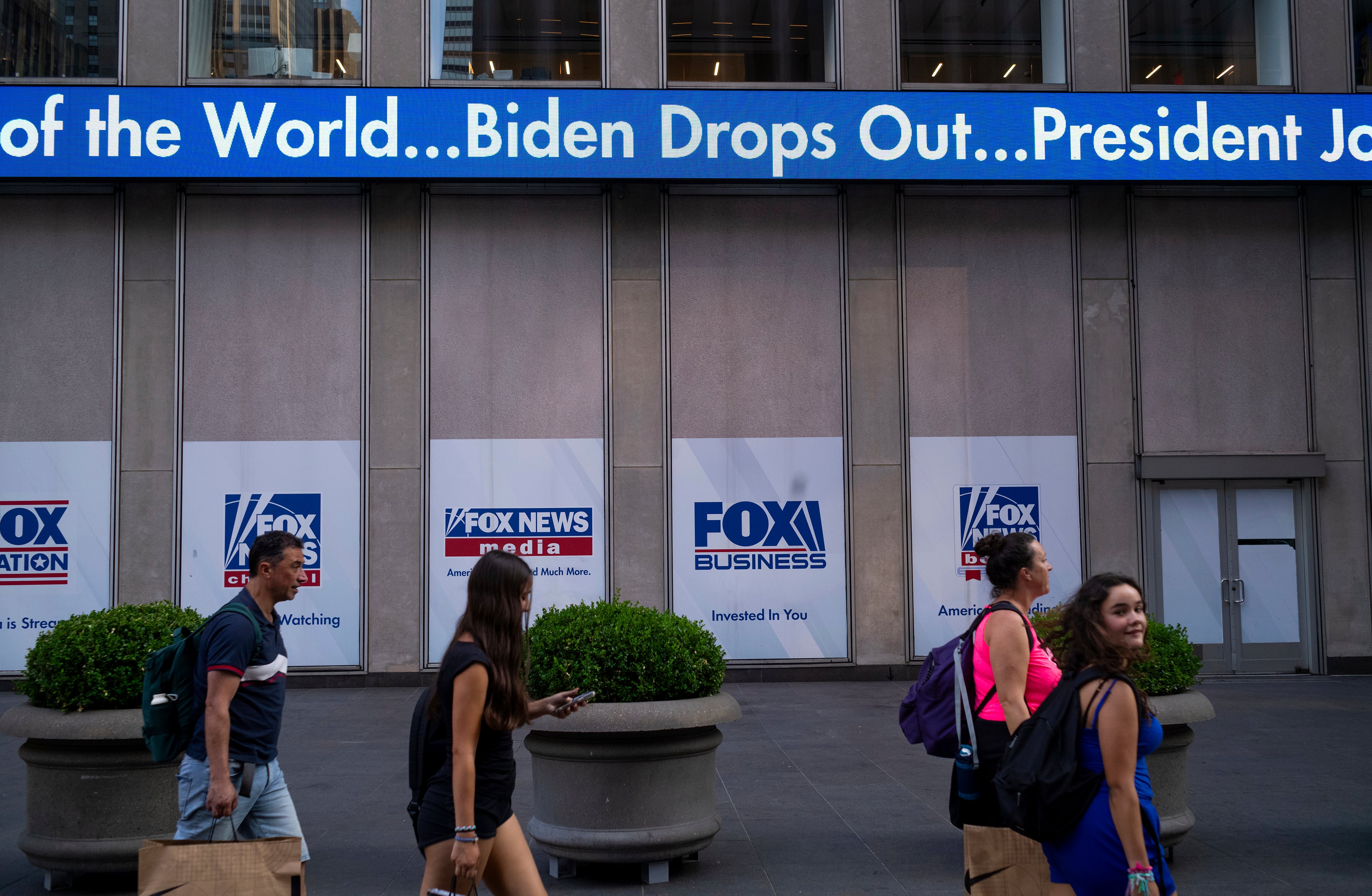

Wikipedia has joined online platforms such as Facebook, Twitter, and Google in taking steps to prevent disinformation on Election Day.

"When we talk about disinformation, what we mean is coordinated attempts to misinform the public, to get people to think about something that is incorrect or to get people to do something based on false information," Ryan Merkley, chief of staff at the Wikimedia Foundation, the nonprofit behind the online encyclopedia, told Cheddar. "When we talk about misinformation, what we mostly mean is when those attempts are successful and well-meaning folks who actually believe that information spread it even further."

To combat both mis- and disinformation, the nonprofit has assembled a special task force of technical, legal, and communications staff to support the thousands of unpaid volunteers the site relies on to write and edit entries.

"The number one defense against disinformation is volunteers showing up every day editing those articles, over 50 million articles worldwide, 300-plus languages," Merkley said. "Every day volunteers root out bad information and make sure that what's there is reliably sourced and available to everybody."

Merkley stressed that this is nothing new for Wikipedia. After two decades of building its capacity for transparency and accountability, he's confident the site can weather the 2020 election.

That doesn't mean the nonprofit isn't pulling out all the stops for this highly anticipated and contentious presidential election. One precaution, for instance, limits edits to the 2020 U.S. election page to volunteer accounts that are more than 30 days old and have at least 500 edits to their name.

Merkley explained that Wikimedia feels a special responsibility to ensure accuracy, given how much of the internet is integrated with its vast information database.

"If you ask Alexa a question, it's often answering with information pulled straight from Wikipedia, or if you do a Google search, in the sidebar where you see other information, a lot of that is pulled straight from Wikipedia," he said. "So if it's wrong on our platform, it could be wrong everywhere, and we care very deeply about getting that right."

As for the possibility of disinformation coming from the highest levels of power, Merkley said it makes no difference to Wikipedia's approach.

"Mis- and disinformation can come from anywhere," he said. "The important thing is whether or not that source is verifiable, whether the facts are reliable facts. It's less about where it comes from and more about what is the impact that is intended from that information."